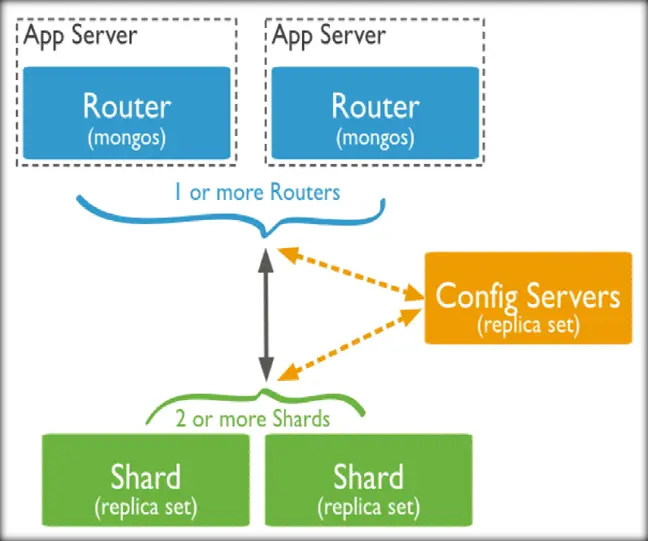

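MongoDB Sharding Cluster

Environment plan

- Config server nodes

Ports 28018-28020: one primary and two secondaries (arbiters are not supported)

- Two shard replica sets

sh1: ports 28021-28023, one primary, one secondary, one arbiter

sh2: ports 28024-28026, one primary, one secondary, one arbiter

- mongos node

Port 28017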

Shard node configuration

Create directories

mkdir -p /mongodb/28021/conf /mongodb/28021/log /mongodb/28021/data

mkdir -p /mongodb/28022/conf /mongodb/28022/log /mongodb/28022/data

mkdir -p /mongodb/28023/conf /mongodb/28023/log /mongodb/28023/data

mkdir -p /mongodb/28024/conf /mongodb/28024/log /mongodb/28024/data

mkdir -p /mongodb/28025/conf /mongodb/28025/log /mongodb/28025/data

mkdir -p /mongodb/28026/conf /mongodb/28026/log /mongodb/28026/data

Configuration files
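The per-node files in this section differ only in the port number and the replica set name. The article writes one file per replica set and derives the others with cp and sed; as an alternative, the files could be generated by a single function. The following is a minimal sketch under assumptions: write_shard_conf and MONGODB_BASE are hypothetical names introduced here, the storage options are trimmed for brevity, and the script defaults to a scratch directory so it can be tried without touching /mongodb.

```shell
# Sketch only: write_shard_conf and MONGODB_BASE are illustrative names,
# not part of MongoDB. Defaults to a scratch directory for safe trial runs;
# export MONGODB_BASE=/mongodb to target the layout used in this article.
base="${MONGODB_BASE:-$(mktemp -d)}"

# write_shard_conf PORT REPLSET: create the node directories and render its config.
write_shard_conf() {
    port="$1"
    rs="$2"
    mkdir -p "$base/$port/conf" "$base/$port/log" "$base/$port/data"
    # Unquoted EOF, so $base, $port and $rs are expanded by the shell.
    cat > "$base/$port/conf/mongodb.conf" <<EOF
systemLog:
  destination: file
  path: $base/$port/log/mongodb.log
  logAppend: true
storage:
  dbPath: $base/$port/data
  directoryPerDB: true
net:
  bindIp: 192.168.57.3,127.0.0.1
  port: $port
replication:
  oplogSizeMB: 2048
  replSetName: $rs
sharding:
  clusterRole: shardsvr
processManagement:
  fork: true
EOF
}

for p in 28021 28022 28023; do write_shard_conf "$p" sh1; done
for p in 28024 28025 28026; do write_shard_conf "$p" sh2; done

# Show which replica set each generated file belongs to.
grep 'replSetName' "$base"/*/conf/mongodb.conf
```

Generating each file from its own port avoids the risk that a sed substitution rewrites an unrelated occurrence of the port digits.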

sh1

cat > /mongodb/28021/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/28021/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/28021/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.57.3,127.0.0.1
  port: 28021
replication:
  oplogSizeMB: 2048
  replSetName: sh1
sharding:
  clusterRole: shardsvr  # cluster role of a shard member; do not change this
processManagement:
  fork: true
EOF

\cp /mongodb/28021/conf/mongodb.conf /mongodb/28022/conf/

\cp /mongodb/28021/conf/mongodb.conf /mongodb/28023/conf/

sed 's#28021#28022#g' /mongodb/28022/conf/mongodb.conf -i

sed 's#28021#28023#g' /mongodb/28023/conf/mongodb.conf -i

sh2

cat > /mongodb/28024/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/28024/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/28024/data
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.57.3,127.0.0.1
  port: 28024
replication:
  oplogSizeMB: 2048
  replSetName: sh2
sharding:
  clusterRole: shardsvr
processManagement:
  fork: true
EOF

\cp /mongodb/28024/conf/mongodb.conf /mongodb/28025/conf/

\cp /mongodb/28024/conf/mongodb.conf /mongodb/28026/conf/

sed 's#28024#28025#g' /mongodb/28025/conf/mongodb.conf -i

sed 's#28024#28026#g' /mongodb/28026/conf/mongodb.conf -i

Start all nodes that will form the replica sets
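All six shard members (ports 28021 through 28026) have to be running before the replica sets can be initialized, and a loop makes it harder to miss one. This is only a sketch: start_nodes and the DRY_RUN switch are hypothetical names introduced here, and by default the script prints the commands instead of running them.

```shell
# Sketch: DRY_RUN is an illustrative variable, not a mongod option.
# With DRY_RUN=1 (the default) the commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

# start_nodes PORT...: start (or print the start command for) each node.
start_nodes() {
    for port in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "mongod -f /mongodb/$port/conf/mongodb.conf"
        else
            # Stop at the first node that fails to start.
            mongod -f "/mongodb/$port/conf/mongodb.conf" || return 1
        fi
    done
}

start_nodes 28021 28022 28023 28024 28025 28026
```

Setting DRY_RUN=0 in the environment makes the same loop start the processes for real.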

mongod -f /mongodb/28021/conf/mongodb.conf

mongod -f /mongodb/28022/conf/mongodb.conf

mongod -f /mongodb/28023/conf/mongodb.conf

mongod -f /mongodb/28024/conf/mongodb.conf

mongod -f /mongodb/28025/conf/mongodb.conf

mongod -f /mongodb/28026/conf/mongodb.conf

Initialize the shard replica sets

mongo --port 28021

use admin

config = {_id: 'sh1', members: [

{_id: 0, host: '192.168.57.3:28021'},

{_id: 1, host: '192.168.57.3:28022'},

{_id: 2, host: '192.168.57.3:28023',"arbiterOnly":true}]

}

rs.initiate(config)

mongo --port 28024

use admin

config = {_id: 'sh2', members: [

{_id: 0, host: '192.168.57.3:28024'},

{_id: 1, host: '192.168.57.3:28025'},

{_id: 2, host: '192.168.57.3:28026',"arbiterOnly":true}]

}

rs.initiate(config)

Configure the config server nodes

Create directories

mkdir -p /mongodb/28018/conf /mongodb/28018/log /mongodb/28018/data

mkdir -p /mongodb/28019/conf /mongodb/28019/log /mongodb/28019/data

mkdir -p /mongodb/28020/conf /mongodb/28020/log /mongodb/28020/data

Prepare the configuration files

cat > /mongodb/28018/conf/mongodb.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/28018/log/mongodb.log
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: /mongodb/28018/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 192.168.57.3,127.0.0.1
  port: 28018
replication:
  oplogSizeMB: 2048
  replSetName: configReplSet
sharding:
  clusterRole: configsvr
processManagement:
  fork: true
EOF

\cp /mongodb/28018/conf/mongodb.conf /mongodb/28019/conf/

\cp /mongodb/28018/conf/mongodb.conf /mongodb/28020/conf/

sed 's#28018#28019#g' /mongodb/28019/conf/mongodb.conf -i

sed 's#28018#28020#g' /mongodb/28020/conf/mongodb.conf -i

Start the services

mongod -f /mongodb/28018/conf/mongodb.conf

mongod -f /mongodb/28019/conf/mongodb.conf

mongod -f /mongodb/28020/conf/mongodb.conf

Initialize the config server replica set

mongo --port 28018

use admin

config = {_id: 'configReplSet', members: [

{_id: 0, host: '192.168.57.3:28018'},

{_id: 1, host: '192.168.57.3:28019'},

{_id: 2, host: '192.168.57.3:28020'}]

}

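rs.initiate(config)

Note: in older versions the config server could be a single node, although a replica set was the official recommendation. Since MongoDB 3.4 the config servers must be deployed as a replica set, and that replica set cannot contain an arbiter.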
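The three rs.initiate documents used so far differ only in the set name, the ports, and whether the last member is an arbiter. A small generator can make that pattern explicit. This is a sketch under assumptions: rs_config is a hypothetical helper name, and it only prints the command text rather than talking to MongoDB.

```shell
# Sketch: rs_config is an illustrative helper, not a MongoDB tool.
# Usage: rs_config NAME HOST ARBITER_LAST PORT...
# ARBITER_LAST=yes marks the last port as arbiterOnly (shards sh1 and sh2);
# ARBITER_LAST=no yields data-bearing members only (config servers).
rs_config() {
    name="$1"; host="$2"; arbiter_last="$3"
    shift 3
    total="$#"
    id=0
    members=""
    for port in "$@"; do
        member="{_id: $id, host: '$host:$port'"
        if [ "$arbiter_last" = "yes" ] && [ "$id" -eq $((total - 1)) ]; then
            member="$member, arbiterOnly: true"
        fi
        member="$member}"
        # Join members with a comma, without a leading separator.
        members="${members:+$members, }$member"
        id=$((id + 1))
    done
    printf '%s\n' "rs.initiate({_id: '$name', members: [$members]})"
}

rs_config sh1 192.168.57.3 yes 28021 28022 28023
rs_config configReplSet 192.168.57.3 no 28018 28019 28020
```

The printed text could then be pasted into the mongo shell of the first member of each set, exactly like the hand-written documents.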

Configure the mongos node

Create directories

mkdir -p /mongodb/28017/conf /mongodb/28017/log

Prepare the configuration file

cat > /mongodb/28017/conf/mongos.conf <<EOF
systemLog:
  destination: file
  path: /mongodb/28017/log/mongos.log
  logAppend: true
net:
  bindIp: 192.168.57.3,127.0.0.1
  port: 28017
sharding:
  configDB: configReplSet/192.168.57.3:28018,192.168.57.3:28019,192.168.57.3:28020
processManagement:
  fork: true
EOF

Start the service

mongos -f /mongodb/28017/conf/mongos.conf

Add the shards to the cluster

mongo 192.168.57.3:28017/admin

db.runCommand( { addshard : "sh1/192.168.57.3:28021,192.168.57.3:28022,192.168.57.3:28023",name:"shard1"} )

db.runCommand( { addshard : "sh2/192.168.57.3:28024,192.168.57.3:28025,192.168.57.3:28026",name:"shard2"} )
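Both the configDB setting and the addshard commands identify a replica set with a string of the form setName/host:port,host:port,... Building that string from a port list reduces typing mistakes. A minimal sketch, where seed_list is a hypothetical helper name introduced for illustration:

```shell
# Sketch: seed_list is an illustrative helper name.
# Usage: seed_list REPLSET HOST PORT...
# Prints REPLSET/HOST:PORT,HOST:PORT,... as used by configDB and addshard.
seed_list() {
    rs="$1"; host="$2"
    shift 2
    hosts=""
    for port in "$@"; do
        # Append host:port, inserting a comma only between entries.
        hosts="${hosts:+$hosts,}$host:$port"
    done
    printf '%s/%s\n' "$rs" "$hosts"
}

seed_list configReplSet 192.168.57.3 28018 28019 28020
seed_list sh1 192.168.57.3 28021 28022 28023
seed_list sh2 192.168.57.3 28024 28025 28026
```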

List the shards

mongos> db.runCommand( { listshards : 1 } )

Check the overall status

mongos> sh.status();

Range sharding configuration

Enable sharding

mongo --port 28017 admin

Enable sharding for the jcwit database

mongos> db.runCommand({enablesharding:"jcwit"})
{
    "ok" : 1,
    "operationTime" : Timestamp(1560892144, 5),
    "$clusterTime" : {
        "clusterTime" : Timestamp(1560892144, 5),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    }
}

Shard the collection on a shard key

mongos> use jcwit;

switched to db jcwit

mongos> db.vast.ensureIndex({id:1})

{
    "raw" : {
        "sh2/192.168.57.3:28024,192.168.57.3:28025" : {
            "createdCollectionAutomatically" : false,
            "numIndexesBefore" : 1,
            "numIndexesAfter" : 2,
            "ok" : 1
        }
    },
    "ok" : 1,
    "operationTime" : Timestamp(1560892172, 8),
    "$clusterTime" : {
        "clusterTime" : Timestamp(1560892172, 8),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    }
}

mongos> use admin

switched to db admin

mongos> db.runCommand({shardcollection:"jcwit.vast",key:{id:1}})

{
    "collectionsharded" : "jcwit.vast",
    "collectionUUID" : UUID("0c8cc801-eceb-453e-8e41-c93fa9eb5eb9"),
    "ok" : 1,
    "operationTime" : Timestamp(1560892246, 10),
    "$clusterTime" : {
        "clusterTime" : Timestamp(1560892246, 10),
        "signature" : {
            "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
            "keyId" : NumberLong(0)
        }
    }
}

mongos> use jcwit

switched to db jcwit

mongos> for(i=1;i<1000000;i++){ db.vast.insert({"id":i,"name":"shenzheng","age":70,"date":new Date()}); }

mongos> db.vast.stats()

Verification

shard1:

mongo --port 28021 jcwit

db.vast.count();

shard2:

mongo --port 28024 jcwit

db.vast.count();

Hash sharding configuration

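The other steps are the same as for range sharding. The only difference is that id is hashed: the index is created as a hashed index, and the shard key passed to shardcollection is {id: "hashed"} instead of {id: 1}.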
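The practical difference shows up with monotonically increasing keys such as the id values inserted by the test loop earlier. With range sharding the newest ids all fall into the highest range, so recent writes land on one shard, while hashing scatters them. The sketch below only illustrates that effect and rests on loud assumptions: two shards, a single range split point at id 100, and cksum as a stand-in hash (MongoDB uses its own hash function for hashed indexes). All function names are hypothetical.

```shell
# Sketch with assumptions: two shards, one range split point at id 100,
# and cksum (a CRC) standing in for MongoDB's real hash function.

range_shard() {   # contiguous id ranges map to one shard each
    if [ "$1" -le 100 ]; then echo shard1; else echo shard2; fi
}

hash_shard() {    # hash the id, then pick a shard from the parity of the hash
    h=$(printf '%s' "$1" | cksum | cut -d ' ' -f 1)
    case "$h" in
        *[02468]) echo shard1 ;;
        *)        echo shard2 ;;
    esac
}

# count_recent FUNCTION SHARD: how many of the 50 highest ids (151..200)
# the given placement function assigns to the given shard.
count_recent() {
    n=0
    id=151
    while [ "$id" -le 200 ]; do
        if [ "$("$1" "$id")" = "$2" ]; then n=$((n + 1)); fi
        id=$((id + 1))
    done
    echo "$n"
}

echo "range:  shard1=$(count_recent range_shard shard1) shard2=$(count_recent range_shard shard2)"
echo "hashed: shard1=$(count_recent hash_shard shard1) shard2=$(count_recent hash_shard shard2)"
```

Under these assumptions the range line always reports every recent id on shard2, whereas the hashed line splits them between the two shards according to the hash values.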

db.vast.ensureIndex( { id: "hashed" } )

Managing the sharded cluster

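Check whether the connection is to a sharded cluster

db.runCommand({ isdbgrid : 1})

List all shards

db.runCommand({ listshards : 1})

List the databases that have sharding enabled

admin> use config

config> db.databases.find( { "partitioned": true } )

Or:

config> db.databases.find() // list the sharding status of every database

View the shard keys

db.collections.find().pretty()

View sharding details

sh.status()

Remove a shard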

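(1) Check whether the balancer is enabled

sh.getBalancerState()

(2) Remove the shard2 node (use with caution)

mongos> db.runCommand( { removeShard: "shard2" } )

Note: removing a shard immediately triggers the balancer, which migrates the chunks off that shard.

Balancer operations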

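The balancer automatically monitors how chunks are distributed across all shards and migrates chunks between them. It originally ran inside mongos; since MongoDB 3.4 it runs on the primary of the config server replica set.

When does it run?

1. It runs automatically in the background and migrates chunks when their distribution across the shards becomes uneven.

2. When a shard is removed, migration starts immediately.

3. If an active window is configured, the balancer runs only within that time window.

The balancer can be stopped and restarted when needed, for example while taking a backup.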

mongos> sh.stopBalancer()

mongos> sh.startBalancer()

Configure the balancing window

use config

sh.setBalancerState( true )

db.settings.update({ _id : "balancer" }, { $set : { activeWindow : { start : "3:00", stop : "5:00" } } }, true )

sh.getBalancerWindow()

sh.status()
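To reason about whether a given time of day falls inside an activeWindow such as 3:00 to 5:00, the check can be written out by hand. This is only a sketch of the idea, not MongoDB's implementation: to_minutes and in_window are hypothetical helpers, and treating the stop time as exclusive is an assumption made here.

```shell
# Sketch: to_minutes and in_window are illustrative helpers for reasoning
# about a start/stop window given as HH:MM strings.

to_minutes() {    # "3:00" or "03:00" -> minutes since midnight
    h="${1%%:*}"; m="${1##*:}"
    h="${h#0}"; m="${m#0}"      # drop one leading zero so 08 is not read as octal
    echo $(( ${h:-0} * 60 + ${m:-0} ))
}

# in_window NOW START STOP: exit status 0 if NOW is inside the window.
# Assumption: the stop time itself counts as outside the window.
in_window() {
    now=$(to_minutes "$1"); start=$(to_minutes "$2"); stop=$(to_minutes "$3")
    if [ "$start" -le "$stop" ]; then
        [ "$now" -ge "$start" ] && [ "$now" -lt "$stop" ]
    else
        # The window wraps past midnight, for example 23:00 to 5:00.
        [ "$now" -ge "$start" ] || [ "$now" -lt "$stop" ]
    fi
}

for t in 2:59 3:00 4:30 5:00; do
    if in_window "$t" 3:00 5:00; then echo "$t inside"; else echo "$t outside"; fi
done
```

The second branch matters for overnight maintenance windows, where the start time is later in the day than the stop time.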

http://www.jcwit.com/article/73/